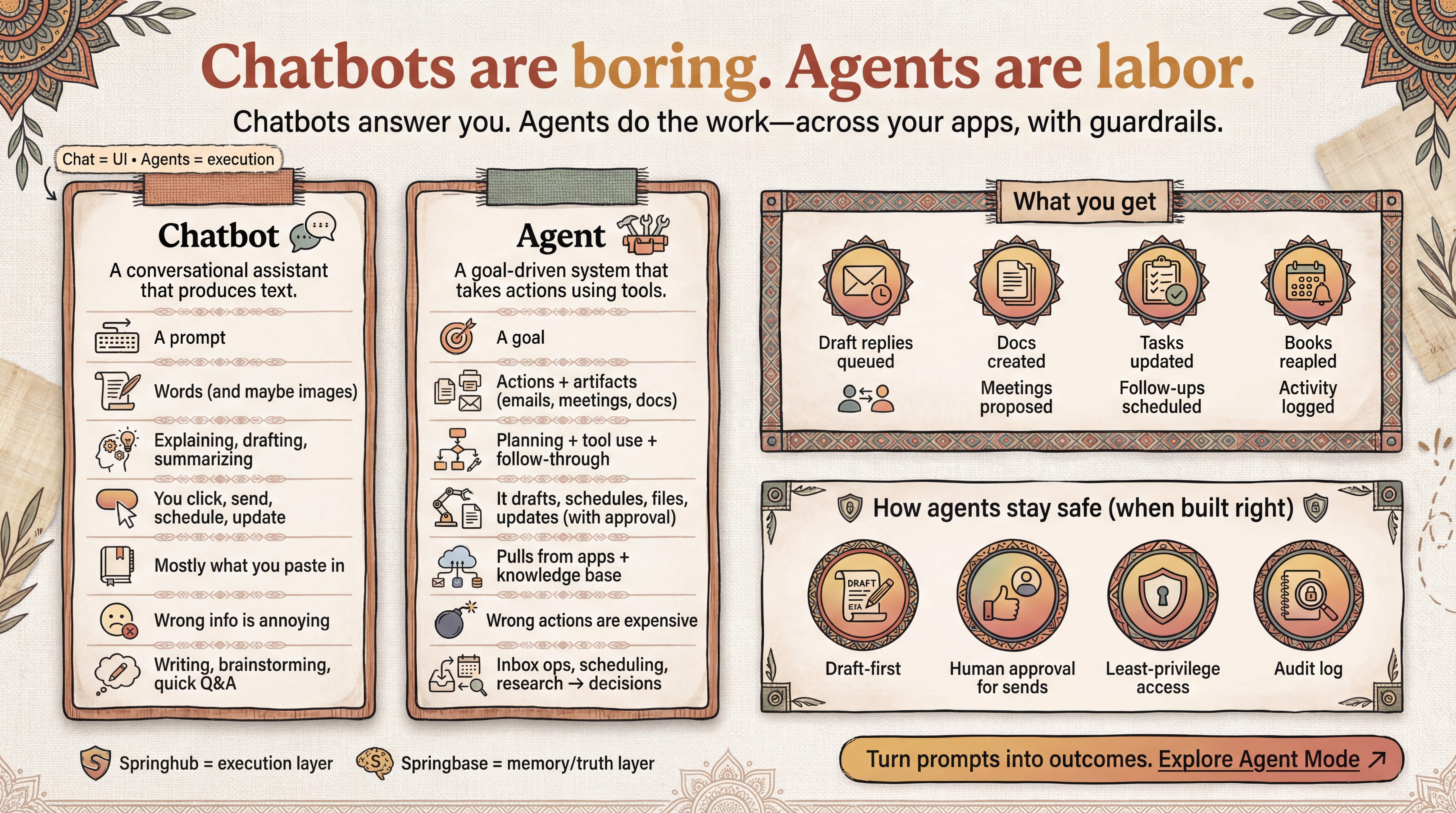

Chatbots are boring. Agents are labor. (And that should terrify you a little.)

A chatbot talks. An agent acts—across your tools, your files, your calendar, your inbox, your workflows—often with multiple steps, retries, and judgment calls. And once software starts doing labor, the impact isn’t incremental. It’s economic.

For the past two years, “AI” mostly meant a chat box that answers questions. Helpful, sure. But also… kinda quaint.

Because the real shift isn’t “chatbots got smarter.”

It’s this:

Chat is a user interface. Agents are labor.

Let’s break down what’s happening, why it’s different, what can go wrong, and how platforms like Springhub + Springbase can make this useful (instead of chaotic).

1) What’s the difference between a chatbot and an agent?

Chatbot (the old world)

A chatbot is basically:

- Input: you type a question

- Output: it generates text (or maybe an image)

- You do the next step

It’s “assistance” in the same way a friend giving advice is assistance.

Agent (the new world)

An agent is more like:

- Goal: “clean up my inbox,” “schedule a meeting,” “draft a report,” “ship a feature”

- Plan: breaks the goal into steps

- Tools: uses software tools (email, docs, calendar, GitHub, web browsing, etc.)

- Execution: takes actions, checks results, continues until done (or asks for approval)
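To make that loop concrete, here is a minimal Python sketch. Treat it as an illustration under assumptions, not as how any particular vendor builds agents: `Step`, `run_agent`, and the two toy tools are hypothetical names, and the plan is hard-coded where a real agent would ask a model to produce it.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical types for illustration; not taken from any real agent framework.
@dataclass
class Step:
    tool: str  # which tool to call
    arg: str   # a single string argument keeps the sketch small

# A tool returns (ok, detail) so the loop can check the result of each action.
Tool = Callable[[str], tuple[bool, str]]

def run_agent(goal: str, plan: list[Step], tools: dict[str, Tool]) -> dict:
    """Execute the plan step by step and hand off to a human on the first failure."""
    log = []
    for step in plan:
        ok, detail = tools[step.tool](step.arg)
        log.append((step.tool, ok, detail))
        if not ok:
            return {"goal": goal, "status": "needs_human", "log": log}
    return {"goal": goal, "status": "done", "log": log}

# Toy tools standing in for real calendar integrations.
tools = {
    "find_slot": lambda who: (True, f"Tue 10:00 works for {who}"),
    "send_invite": lambda who: (False, "calendar API returned 403"),
}
plan = [Step("find_slot", "alice"), Step("send_invite", "alice")]
outcome = run_agent("schedule a meeting", plan, tools)
print(outcome["status"])  # needs_human
```

The point of the sketch is the shape: the loop checks every result and stops with a log instead of pushing on after a failed action.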

OpenAI explicitly frames agents as systems that can perform tasks and execute multi-step workflows, not just respond in chat (OpenAI – Practical guide to building agents, OpenAI – AI agents use case).

Microsoft also draws a clean public-facing line between agents and chatbots: chatbots converse; agents complete work across systems (Microsoft – agents vs chatbots).

2) Why “agents” are not “a better ChatGPT”

Here’s the spicy take:

Chatbots scale answers. Agents scale outcomes.

A chatbot can help 1,000 people write better emails.

An agent can help 1,000 people never write routine emails again.

That difference matters because “doing the work” requires capabilities a chatbot doesn’t need:

- State: remembering what it already did

- Permissions: access to tools and data

- Reliability: error handling, retries, safe execution

- Judgment: when to ask, when to act, when to stop

- Auditing: what happened and why
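Two items on that list, state and auditing, fit in a few lines of Python. This is a sketch under assumptions: `RunState` and the step ID are made-up names, and a real agent would persist this record somewhere durable rather than keep it in memory.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class RunState:
    # State: IDs of steps that already completed.
    done: set[str] = field(default_factory=set)
    # Auditing: one (step_id, outcome) entry per attempt.
    audit: list[tuple[str, str]] = field(default_factory=list)

    def attempt(self, step_id: str, action: Callable[[], None]) -> str:
        """Run `action` at most once per step_id and record what happened."""
        if step_id in self.done:
            outcome = "skipped: already done"
        else:
            action()
            self.done.add(step_id)
            outcome = "executed"
        self.audit.append((step_id, outcome))
        return outcome

sent = []
state = RunState()
state.attempt("reply-to-thread-42", lambda: sent.append("reply"))
state.attempt("reply-to-thread-42", lambda: sent.append("reply"))  # e.g. a retry
print(len(sent))  # 1
```

Without the `done` set, the retry would send the same reply twice. A chatbot never has to worry about that; an agent does.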

OpenAI’s own “building agents” guidance focuses heavily on workflow design and tool use—not prompt cleverness—because agents fail in very un-chat-like ways (OpenAI – Practical guide).

3) The 3 killer jobs agents are already taking over

A) Email: from “drafting” to “operating”

Chatbot era: “Write a reply to this email.”

Agent era:

- read the thread

- identify the ask

- check your calendar

- propose times

- send the reply

- set a follow-up if no response

OpenAI’s connectors are a signal here: the model stops being “just chat” and becomes a system that can access and operate inside your communication layer (example: Outlook email/calendar connectors) (OpenAI Help – Outlook connectors).

B) Scheduling: the hidden tax agents eliminate

Scheduling is boring, repetitive, and full of edge cases (time zones, conflicts, preferences). Perfect agent territory.
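Here is a small Python sketch of two of those edge cases, conflicts and time zones. It assumes a hypothetical `free_slots` helper and an invented calendar, and uses only the standard library (`zoneinfo` needs time zone data from the operating system or the `tzdata` package).

```python
from datetime import datetime, timedelta
from zoneinfo import ZoneInfo

def free_slots(busy, window_start, window_end, duration):
    """Return the first free slot of `duration` in each gap between busy blocks."""
    slots, cursor = [], window_start
    for busy_start, busy_end in sorted(busy):
        if busy_start - cursor >= duration:
            slots.append((cursor, cursor + duration))
        cursor = max(cursor, busy_end)
    if window_end - cursor >= duration:
        slots.append((cursor, cursor + duration))
    return slots

ny = ZoneInfo("America/New_York")
london = ZoneInfo("Europe/London")

def day(hour, minute=0):
    """A time on 10 March 2025 in the organizer's (New York) time zone."""
    return datetime(2025, 3, 10, hour, minute, tzinfo=ny)

busy = [(day(9, 30), day(10, 30))]  # one existing meeting
slots = free_slots(busy, day(9), day(12), timedelta(minutes=30))
for start, _ in slots:
    print(start.strftime("%H:%M %Z"), "=", start.astimezone(london).strftime("%H:%M %Z"))
# 09:00 EDT = 13:00 GMT
# 10:30 EDT = 14:30 GMT
```

The date is picked on purpose: on 10 March 2025 New York has already switched to daylight saving time and London has not, so the gap is four hours instead of the usual five. That is exactly the kind of nuance a human scheduler gets wrong by habit.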

C) Research: from summaries to decisions

Chatbot era: summarize 5 links.

Agent era:

- find sources

- read them

- compare claims

- extract structured notes

- draft a brief

- highlight uncertainty + what to verify

That’s not “writing.” That’s knowledge work execution.
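The "compare claims" and "highlight uncertainty" steps can be sketched in Python. Everything here is an assumption for illustration: the `Note` type, the `build_brief` function, the placeholder sources, and the simple rule that a claim backed by only one source goes on the "verify" list.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Note:
    source: str  # where the claim came from
    claim: str   # a normalized statement extracted from that source

def build_brief(notes: list[Note]) -> dict:
    """Group claims by how many distinct sources back them."""
    sources_by_claim = defaultdict(set)
    for note in notes:
        sources_by_claim[note.claim].add(note.source)
    brief = {"supported": [], "verify": []}
    for claim, sources in sorted(sources_by_claim.items()):
        key = "supported" if len(sources) >= 2 else "verify"
        brief[key].append((claim, sorted(sources)))
    return brief

# Placeholder notes; not real sources or findings.
notes = [
    Note("source-a", "claim X"),
    Note("source-b", "claim X"),
    Note("source-b", "claim Y"),
]
brief = build_brief(notes)
print(brief["verify"])  # [('claim Y', ['source-b'])]
```

Counting sources is a crude stand-in for judgment, but it shows the difference in output: structured notes with explicit uncertainty, not a paragraph of summary.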

4) The “agents are labor” mental model (the important part)

Most people imagine agents as “a chatbot with buttons.”

Wrong.

The better mental model is:

An agent is a junior operator inside your software stack.

It:

- takes a goal

- uses tools

- does multiple steps

- sometimes makes mistakes

- needs supervision and guardrails

And this is exactly why the agent wave is both:

- massively valuable

- mildly terrifying

Because labor implies:

- accountability

- cost

- quality

- security

- governance

If a chatbot gives you a wrong answer, you shrug.

If an agent:

- emails the wrong person,

- schedules the wrong meeting,

- deletes the wrong file,

- pushes broken code…

…that’s not “oops.” That’s an incident.

5) Why everyone is suddenly yelling about “agentic workflows” (and why they’re right)

Developer tooling is one of the loudest early indicators of real agent adoption, because devs love anything that reduces repetitive work.

VentureBeat’s recent coverage of Claude Code updates is a good example: the product direction is about smoother workflows and smarter agents, not “better chat responses” (VentureBeat – Claude Code 2.1.0, VentureBeat – requested feature update).

That’s the pattern you should watch:

- not “new model, higher benchmark”

- but “new capability to do things end-to-end”

6) The dark side: agents fail in scarier ways than chatbots

Failure mode #1: Confident wrong action

Hallucinated text is annoying.

Hallucinated actions are expensive.

Failure mode #2: Permission overreach

To be useful, agents need access:

- calendar

- docs

- drive

- Slack

- GitHub

That’s a massive blast radius. The more useful the agent, the more dangerous misconfiguration becomes.
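One way to shrink that blast radius is to compare what an agent has been granted against what its workflow needs. A minimal Python sketch, assuming made-up scope names and a hypothetical `audit_grant` helper (real providers define their own scope strings):

```python
# Hypothetical scope names; real providers define their own.
REQUIRED_SCOPES = {
    "draft_replies": {"email.read", "email.draft"},
    "schedule_meeting": {"calendar.read", "calendar.write"},
}

def audit_grant(workflow: str, granted: set[str]) -> dict:
    """Report scopes the workflow lacks and scopes it holds but does not need."""
    required = REQUIRED_SCOPES[workflow]
    return {"missing": required - granted, "excess": granted - required}

report = audit_grant(
    "draft_replies",
    {"email.read", "email.draft", "email.send", "drive.delete"},
)
print(sorted(report["excess"]))  # ['drive.delete', 'email.send']
```

Anything in `excess` is access the agent can misuse without ever needing it for the job.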

Failure mode #3: Silent partial completion

Agents can “mostly do the task” and leave landmines:

- created the doc but didn’t share it

- emailed but didn’t include the attachment

- scheduled the meeting but got the time zone wrong
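A common defense is to check post-conditions after the agent reports success, instead of trusting the report. A minimal Python sketch, where the `unmet_conditions` helper, the check names, and the result fields are all hypothetical:

```python
def unmet_conditions(result: dict, checks: dict) -> list[str]:
    """Return the names of the post-conditions that the result fails."""
    return [name for name, check in checks.items() if not check(result)]

# Hypothetical post-conditions for a "send the report and book the review" task.
checks = {
    "doc is shared": lambda r: len(r["shared_with"]) > 0,
    "email has attachment": lambda r: len(r["attachments"]) > 0,
    "meeting has explicit time zone": lambda r: r["meeting_tz"] is not None,
}

# What the agent actually left behind (invented for the example).
result = {"shared_with": [], "attachments": ["report.pdf"], "meeting_tz": None}
print(unmet_conditions(result, checks))
# ['doc is shared', 'meeting has explicit time zone']
```

An empty list means the task is done by your definition, not just by the agent's.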

Failure mode #4: Tool brittleness

APIs fail. Rate limits happen. Permissions expire. File formats break. Real agents need the boring stuff: retries, fallbacks, escalation.
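The boring stuff looks roughly like this in Python. It is a sketch under assumptions: `call_with_retries` and `Escalation` are hypothetical names, the backoff numbers are arbitrary, and production code would retry only on errors known to be transient instead of catching every exception.

```python
import time

class Escalation(Exception):
    """Raised when retries and the fallback are exhausted and a human must step in."""

def call_with_retries(primary, fallback=None, attempts=3, base_delay=0.5, sleep=time.sleep):
    last_error = None
    for attempt in range(attempts):
        try:
            return primary()
        except Exception as err:  # sketch only: narrow this to transient errors
            last_error = err
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # exponential backoff
    if fallback is not None:
        try:
            return fallback()
        except Exception as err:
            last_error = err
    raise Escalation(f"needs human attention: {last_error}") from last_error

# A fake tool that fails twice (say, rate limiting) and then succeeds.
calls = {"n": 0}
def flaky_tool():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("rate limited")
    return "ok"

delays = []  # record the waits instead of actually sleeping
print(call_with_retries(flaky_tool, sleep=delays.append), delays)  # ok [0.5, 1.0]
```

The order matters: retry first, fall back second, and escalate to a person only when both fail.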

OpenAI’s own agent-building guidance focuses on these operational realities because they’re the difference between a demo and something you can trust (OpenAI – Practical guide).

7) Where Springhub + Springbase fit (and why it actually matters)

If agents are “labor,” then the winning platforms won’t be the ones with the fanciest chat UI.

They’ll be the ones that solve:

- context

- repeatability

- automation

- governance

Springhub: the “action + orchestration” layer

Springhub positions itself as an AI companion that goes beyond chat, with agent automation, many models, and integrations (Springhub knowledge [1][2]). The key idea is: AI that can act, not just talk.

This aligns perfectly with the agent shift: once you can run workflows across apps, you’re no longer selling “answers”—you’re selling execution.

Springbase: the “memory + truth” layer

Agents without grounded context are basically interns with amnesia.

That’s where Springbase helps: it acts like a structured, reusable knowledge layer so your agent isn’t reinventing the wheel every time:

- your writing style

- your product positioning

- your FAQs

- your internal docs

- your standard operating procedures (SOPs)

This is the difference between:

- “AI that sounds smart” and

- “AI that behaves consistently”

Springhub’s strength around knowledge bases and “context-aware” responses is directly relevant here (Springhub knowledge [2]).

If you want an opinionated one-liner: Agents without a knowledge base are just chaos generators with OAuth.

8) Practical beginner guide: how to start using agents without getting burned

If you’re new to this, don’t start with “full autonomy.” Start with “assisted autonomy.”

Step 1: Pick one narrow workflow

Good starter workflows:

- “Summarize my unread emails + draft replies for the top 3”

- “Turn this article into a LinkedIn post + a Twitter thread”

- “Research X and output a 1-page brief with sources”

Step 2: Add guardrails (non-negotiable)

- approval before sending

- draft mode by default

- limited permissions

- clear logs of actions taken
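Those four guardrails can live in one small wrapper around the risky action. A minimal Python sketch; `handle_email` and its parameters are hypothetical, and nothing is actually sent here:

```python
def handle_email(to, body, *, log, send=False, approved=False,
                 allowed=frozenset({"draft", "send"})):
    """Draft by default; send only when the action is permitted and approved."""
    action = "send" if send else "draft"
    if action not in allowed:
        status = "blocked: action not permitted"
    elif action == "send" and not approved:
        status = "held: awaiting approval"
    else:
        status = "ok"
    # Log every attempt, including the ones that were blocked or held.
    log.append({"action": action, "to": to, "chars": len(body), "status": status})
    return status

log = []
print(handle_email("alice@example.com", "Hi Alice", log=log))             # ok (a draft)
print(handle_email("alice@example.com", "Hi Alice", log=log, send=True))  # held: awaiting approval
print(handle_email("alice@example.com", "Hi Alice", log=log, send=True, approved=True,
                   allowed=frozenset({"draft"})))  # blocked: action not permitted
```

The defaults do the work: forgetting a flag produces a draft, never a sent email.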

Step 3: Make it repeatable with Springbase

Store:

- templates

- checklists

- tone guides

- your “definition of done”

That way the agent becomes less like “creative improv” and more like “operational machine.”

9) The real conclusion (provocative, because you asked)

You’re not watching the rise of smarter chatbots.

You’re watching the rise of software that performs labor.

And society is hilariously unprepared for how fast that changes:

- jobs (obviously)

- trust (quietly)

- security (painfully)

- productivity (unevenly)

The winners won’t be the companies with the cleverest model.

They’ll be the ones who can turn agents into safe, repeatable, context-grounded workers.

That’s why the Springhub + Springbase combo is interesting: it’s not “another chatbot.” It’s a setup aimed at the real game: execution with memory.
